This is a follow-up to two earlier posts of mine. To avoid index fragmentation and the problems I previously had with sets, I implemented two SQL Server Agent jobs that keep the indexes and the full-text catalog of the FIMService database in good shape.

Speed up FIM 2010 R2 SQL performance by rebuild/reorganize indexes

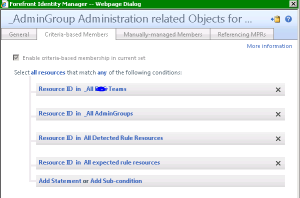

FIM 2010 R2: SQL timeout on using large sets in other sets

For around a month now, the following two SQL jobs have worked perfectly in my customer’s environment, including production. I therefore consider them safe to implement, but you should test them in your own environment first.

By default, both SQL jobs run weekly, every Sunday (the index job at 13:00 and the full-text catalog job at 12:00).
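If Sunday does not suit your maintenance window, the schedules can be moved once the jobs from the scripts below have been created. This is a minimal sketch, assuming the schedule names used in those scripts ('Index rebuild weekly' and 'Weekly FIM.ftCatalog rebuild') are unique in msdb. The target of Saturday 22:00 is only an illustrative assumption.

```sql
USE [msdb];
GO

-- Move the index rebuild schedule to Saturday 22:00 (example values).
-- For weekly schedules (freq_type = 8) freq_interval is a bitmask:
-- 1 = Sunday, 2 = Monday, 4 = Tuesday, 8 = Wednesday,
-- 16 = Thursday, 32 = Friday, 64 = Saturday.
EXEC dbo.sp_update_schedule
    @name = N'Index rebuild weekly',
    @freq_interval = 64,
    @active_start_time = 220000;   -- HHMMSS, 24-hour clock

-- Check the resulting settings of both schedules.
SELECT name, enabled, freq_type, freq_interval, active_start_time
FROM dbo.sysschedules
WHERE name IN (N'Index rebuild weekly', N'Weekly FIM.ftCatalog rebuild');
```

Parameters that are not passed to sp_update_schedule keep their current values, so the weekly frequency itself stays untouched.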

The first SQL job runs a PowerShell script that maintains the indexes on all tables of the FIMService database. The script only rebuilds indexes whose fragmentation is higher than 20% and stops after 100 rebuilds per run (you can adjust both values in the script through the variables $fragmentationThreshold and $indexesToProcess).
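To get an idea of what the job would touch before you schedule it, the fragmentation can be previewed with plain T-SQL. The query below is a rough sketch and not part of the job. It assumes the same 20% threshold and uses the SAMPLED scan mode to mirror the script's sampled fragmentation check. The variable @threshold is a name I made up for illustration.

```sql
USE [FIMService];
GO

-- Preview indexes above the fragmentation threshold.
-- 20 mirrors $fragmentationThreshold in the PowerShell script.
DECLARE @threshold FLOAT = 20;

SELECT
    OBJECT_SCHEMA_NAME(ps.object_id) AS schema_name,
    OBJECT_NAME(ps.object_id)        AS table_name,
    i.name                           AS index_name,
    ps.partition_number,
    ps.avg_fragmentation_in_percent,
    ps.page_count
FROM sys.dm_db_index_physical_stats(DB_ID(), NULL, NULL, NULL, 'SAMPLED') AS ps
JOIN sys.indexes AS i
    ON i.object_id = ps.object_id
   AND i.index_id  = ps.index_id
WHERE ps.index_id > 0                            -- skip heaps
  AND ps.alloc_unit_type_desc = 'IN_ROW_DATA'    -- ignore LOB and row-overflow units
  AND ps.avg_fragmentation_in_percent > @threshold
ORDER BY ps.avg_fragmentation_in_percent DESC;
```

If this returns far more than 100 rows, expect the job to need several weekly runs before every index has been processed, unless you raise $indexesToProcess.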

The PowerShell script is not my work; see the author information at the URL in the script's comments.

Make sure you replace the DOMAIN\USER and SERVERNAME placeholders in the script with values that fit your environment.

USE [msdb]

GO

/****** Object: Job [Index Rebuild on all FIMService tables] Script Date: 11/17/2013 14:08:12 ******/

BEGIN TRANSACTION

DECLARE @ReturnCode INT

SELECT @ReturnCode = 0

/****** Object: JobCategory [[Uncategorized (Local)]]] Script Date: 11/17/2013 14:08:12 ******/

IF NOT EXISTS (SELECT name FROM msdb.dbo.syscategories WHERE name=N'[Uncategorized (Local)]' AND category_class=1)

BEGIN

EXEC @ReturnCode = msdb.dbo.sp_add_category @class=N'JOB', @type=N'LOCAL', @name=N'[Uncategorized (Local)]'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

END

DECLARE @jobId BINARY(16)

EXEC @ReturnCode = msdb.dbo.sp_add_job @job_name=N'Index Rebuild on all FIMService tables',

@enabled=1,

@notify_level_eventlog=0,

@notify_level_email=0,

@notify_level_netsend=0,

@notify_level_page=0,

@delete_level=0,

@description=N'No description available.',

@category_name=N'[Uncategorized (Local)]',

@owner_login_name=N'DOMAIN\USER', @job_id = @jobId OUTPUT

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

/****** Object: Step [Index rebuild step] Script Date: 11/17/2013 14:08:12 ******/

EXEC @ReturnCode = msdb.dbo.sp_add_jobstep @job_id=@jobId, @step_name=N'Index rebuild step',

@step_id=1,

@cmdexec_success_code=0,

@on_success_action=1,

@on_success_step_id=0,

@on_fail_action=2,

@on_fail_step_id=0,

@retry_attempts=0,

@retry_interval=0,

@os_run_priority=0, @subsystem=N'PowerShell',

@command=N'PUSHD SQLSERVER:\SQL\SERVERNAME\DEFAULT

# PowerShell script iterates over tables in database, gathers set of indexes

# then for every index, gathers all partitions and performs RebuildIndex on that partition

# To execute this script:

# Launch SQL PowerShell ( Start -> Run -> sqlps.exe)

# in Powershell window CD SQL\machine_name\instance_name; Example: CD SQL\demo-machine\DEFAULT

# Copy the following script and paste it in SQL powershell window to run this script

# http://sethusrinivasan.com/2012/02/14/index-rebuild-on-large-database-sql-agent-powershell-job/

# following variables can be updated

# database Name

$dbName = "FIMService"

# number of indexes to rebuild, script terminates after Rebuilding specified number of indexes

$indexesToProcess = 100

# fragmentation threshold - indexes with fragmentation less than this value will be skipped

$fragmentationThreshold = 20

$processedIndex = 0

$tables = dir Databases\$dbName\Tables

"Listing all tables from Database:" + $dbName

foreach($table in $tables)

{

" Listing Indexes for Table:" + $table

foreach($index in $table.Indexes)

{

" Listing Physical Partitions for Indexes:" + $index

foreach($partition in $index.PhysicalPartitions)

{

$fragInfo = $index.EnumFragmentation([Microsoft.SqlServer.Management.Smo.FragmentationOption]::Sampled,

$partition.PartitionNumber)

$fragmentation = $fragInfo.Rows.Item(0)["AverageFragmentation"]

" Checking fragmentation on " + $index.Name + " is greater than :" + $fragmentationThreshold

" Current Fragmentation:" + $fragmentation

" Partition:" + $partition.PartitionNumber

if($fragmentation -gt $fragmentationThreshold)

{

" Rebuilding Index: " + $index.Name + " partition:" + $partition.PartitionNumber

$processedIndex = $processedIndex + 1

if($index.IsPartitioned -eq $True)

{

$index.Rebuild($partition.PartitionNumber)

}

else

{

$index.Rebuild()

}

}

if ( $processedIndex -ge $indexesToProcess)

{

break

}

}

if ( $processedIndex -ge $indexesToProcess)

{

break

}

}

if ( $processedIndex -ge $indexesToProcess)

{

break

}

}

POPD',

@database_name=N'master',

@flags=48

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_update_job @job_id = @jobId, @start_step_id = 1

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobschedule @job_id=@jobId, @name=N'Index rebuild weekly',

@enabled=1,

@freq_type=8,

@freq_interval=1,

@freq_subday_type=1,

@freq_subday_interval=0,

@freq_relative_interval=0,

@freq_recurrence_factor=1,

@active_start_date=20120214,

@active_end_date=99991231,

@active_start_time=130000,

@active_end_time=235959,

@schedule_uid=N'69a997b3-6475-4c18-bd87-9f4cf27e687a'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobserver @job_id = @jobId, @server_name = N'(local)'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

COMMIT TRANSACTION

GOTO EndSave

QuitWithRollback:

IF (@@TRANCOUNT > 0) ROLLBACK TRANSACTION

EndSave:

GO
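Since you should test the job before relying on the schedule, it can be started once by hand and its outcome read from the Agent history. The following is a small sketch that assumes the job name from the script above was left unchanged.

```sql
USE [msdb];
GO

-- Start the job immediately; sp_start_job returns as soon as the job is queued.
EXEC dbo.sp_start_job @job_name = N'Index Rebuild on all FIMService tables';
GO

-- Run this later to see the most recent history rows of the job.
-- run_status: 0 = failed, 1 = succeeded, 2 = retry, 3 = canceled, 4 = in progress
-- run_duration is stored as an HHMMSS integer.
SELECT TOP (10)
    j.name,
    h.step_id,
    h.step_name,
    h.run_status,
    h.run_date,
    h.run_time,
    h.run_duration,
    h.message
FROM dbo.sysjobs AS j
JOIN dbo.sysjobhistory AS h
    ON h.job_id = j.job_id
WHERE j.name = N'Index Rebuild on all FIMService tables'
ORDER BY h.instance_id DESC;
```

The row with step_id 0 summarizes the whole job run, while step_id 1 belongs to the PowerShell step.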

The second SQL job uses SQL Server's built-in ALTER FULLTEXT CATALOG ... REORGANIZE statement to optimize the FIMService full-text catalog. You can use this SQL script or the built-in wizard to create the job.

If you use this script, make sure to replace the DOMAIN\USER placeholder with a value that fits your environment.

USE [msdb]

GO

/****** Object: Job [Start Optimize Catalog Population on FIMService.ftCatalog] Script Date: 11/17/2013 14:13:07 ******/

BEGIN TRANSACTION

DECLARE @ReturnCode INT

SELECT @ReturnCode = 0

/****** Object: JobCategory [Full-Text] Script Date: 11/17/2013 14:13:07 ******/

IF NOT EXISTS (SELECT name FROM msdb.dbo.syscategories WHERE name=N'Full-Text' AND category_class=1)

BEGIN

EXEC @ReturnCode = msdb.dbo.sp_add_category @class=N'JOB', @type=N'LOCAL', @name=N'Full-Text'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

END

DECLARE @jobId BINARY(16)

EXEC @ReturnCode = msdb.dbo.sp_add_job @job_name=N'Start Optimize Catalog Population on FIMService.ftCatalog',

@enabled=1,

@notify_level_eventlog=2,

@notify_level_email=0,

@notify_level_netsend=0,

@notify_level_page=0,

@delete_level=0,

@description=N'Scheduled full-text optimize catalog population for full-text catalog ftCatalog in database FIMService. This job was created by the Full-Text Catalog Scheduling dialog or Full-Text Indexing Wizard.',

@category_name=N'Full-Text',

@owner_login_name=N'DOMAIN\USER', @job_id = @jobId OUTPUT

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

/****** Object: Step [Full-Text Indexing] Script Date: 11/17/2013 14:13:07 ******/

EXEC @ReturnCode = msdb.dbo.sp_add_jobstep @job_id=@jobId, @step_name=N'Full-Text Indexing',

@step_id=1,

@cmdexec_success_code=0,

@on_success_action=1,

@on_success_step_id=-1,

@on_fail_action=2,

@on_fail_step_id=-1,

@retry_attempts=0,

@retry_interval=0,

@os_run_priority=0, @subsystem=N'TSQL',

@command=N'USE [FIMService]

ALTER FULLTEXT CATALOG [ftCatalog] REORGANIZE

',

@database_name=N'master',

@flags=0

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_update_job @job_id = @jobId, @start_step_id = 1

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobschedule @job_id=@jobId, @name=N'Weekly FIM.ftCatalog rebuild',

@enabled=1,

@freq_type=8,

@freq_interval=1,

@freq_subday_type=1,

@freq_subday_interval=0,

@freq_relative_interval=0,

@freq_recurrence_factor=1,

@active_start_date=20131024,

@active_end_date=99991231,

@active_start_time=120000,

@active_end_time=235959,

@schedule_uid=N'6ba433a3-79eb-4552-ba0b-5f1cc9d5dc1b'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobserver @job_id = @jobId, @server_name = N'(local)'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

COMMIT TRANSACTION

GOTO EndSave

QuitWithRollback:

IF (@@TRANCOUNT > 0) ROLLBACK TRANSACTION

EndSave:

GO
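To see whether the reorganize step has an effect, the state of the full-text catalog can be inspected before and after a run. This is a hedged sketch, assuming the catalog is named ftCatalog as in the job step above and that the instance is SQL Server 2008 or later, where sys.fulltext_index_fragments is available.

```sql
USE [FIMService];
GO

-- Catalog-level status.
-- PopulateStatus 0 = idle; MergeStatus 1 = a master merge is in progress.
SELECT
    FULLTEXTCATALOGPROPERTY(N'ftCatalog', 'PopulateStatus') AS populate_status,
    FULLTEXTCATALOGPROPERTY(N'ftCatalog', 'MergeStatus')    AS merge_status,
    FULLTEXTCATALOGPROPERTY(N'ftCatalog', 'ItemCount')      AS item_count;

-- Number of full-text index fragments per table.
-- REORGANIZE merges the smaller fragments into one larger fragment.
SELECT
    OBJECT_NAME(f.table_id) AS table_name,
    COUNT(*)                AS fragment_count,
    SUM(f.row_count)        AS fragment_rows
FROM sys.fulltext_index_fragments AS f
GROUP BY f.table_id
ORDER BY fragment_count DESC;
```

A fragment count that drops noticeably after the job has finished suggests the catalog is being merged as intended.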